1 Data Encoding

2 Label Encoding

from sklearn.preprocessing import LabelEncoder

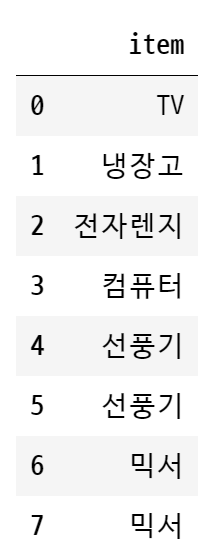

items = ['TV','냉장고','전자렌지','컴퓨터','선풍기','선풍기','믹서','믹서']

# Create a LabelEncoder instance named encoder

encoder = LabelEncoder()

# fit() learns the set of classes that transform() will map to integers

encoder.fit(items)

# transform() performs the label encoding

labels = encoder.transform(items)

print('Encoded values:', labels)

>>> Encoded values: [0 1 4 5 3 3 2 2]

print('Encoded classes:', encoder.classes_)

>>> Encoded classes: ['TV' '냉장고' '믹서' '선풍기' '전자렌지' '컴퓨터']

print('Decoded original values:', encoder.inverse_transform([0, 1, 4, 5, 3, 3, 2, 2]))

>>> Decoded original values: ['TV' '냉장고' '전자렌지' '컴퓨터' '선풍기' '선풍기' '믹서' '믹서']
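As a compact variant of the steps above, `fit_transform()` combines `fit()` and `transform()` in one call. The sketch below also builds an explicit class-to-code lookup; the `mapping` and `restored` names are illustrative and not part of the original post.

```python
from sklearn.preprocessing import LabelEncoder

items = ['TV', '냉장고', '전자렌지', '컴퓨터', '선풍기', '선풍기', '믹서', '믹서']

encoder = LabelEncoder()
# fit_transform() learns the sorted set of classes and encodes in one call
labels = encoder.fit_transform(items)

# Build an explicit class -> integer code lookup from the learned classes_
mapping = {str(cls): code for code, cls in enumerate(encoder.classes_)}
print(mapping)

# inverse_transform() recovers the original strings from the codes
restored = encoder.inverse_transform(labels)
print(list(restored))
```

The integer codes follow the sorted order of the class names and carry no ordinal meaning, which is one reason to consider one-hot encoding for models that would otherwise read the codes as magnitudes.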

3 One-Hot Encoding

3.1 (1) One-hot encoding in sklearn

One-hot encoding in sklearn takes a few steps in this walkthrough:

First, convert the values to integers with LabelEncoder.

Second, reshape the result into 2D data (using reshape).

Third, apply one-hot encoding.
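The three steps above can also be collapsed. Assuming scikit-learn 0.20 or later, `OneHotEncoder` accepts string categories directly, so the `LabelEncoder` pass becomes optional. A minimal sketch under that assumption (the `items_2d` and `dense` names are illustrative, not from the original):

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

items = ['TV', '냉장고', '전자렌지', '컴퓨터', '선풍기', '선풍기', '믹서', '믹서']

# OneHotEncoder expects 2D input: one row per sample, one column per feature
items_2d = np.array(items).reshape(-1, 1)

oh_encoder = OneHotEncoder()
# fit_transform() returns a SciPy sparse matrix by default
oh_labels = oh_encoder.fit_transform(items_2d)

# Convert to a dense array for inspection
dense = oh_labels.toarray()
print(oh_encoder.categories_)
print(dense.shape)
```

The walkthrough continues with the explicit three-step version, which makes each intermediate result visible.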

from sklearn.preprocessing import LabelEncoder, OneHotEncoder

import numpy as np

items=['TV','냉장고','전자렌지','컴퓨터','선풍기','선풍기','믹서','믹서']

# First, convert the values to integers with LabelEncoder.

encoder = LabelEncoder()

encoder.fit(items)

labels = encoder.transform(items)

labels

>>>

array([0, 1, 4, 5, 3, 3, 2, 2])

# Second, reshape the labels into 2D data.

labels = labels.reshape(-1, 1)

labels

>>>

array([[0],

[1],

[4],

[5],

[3],

[3],

[2],

[2]])

# Finally, apply one-hot encoding.

oh_encoder = OneHotEncoder()

oh_encoder.fit(labels)

oh_labels = oh_encoder.transform(labels)

print('One-hot encoded data')

print(oh_labels.shape)

oh_labels.toarray()

>>>

One-hot encoded data

(8, 6)

array([[1., 0., 0., 0., 0., 0.],

[0., 1., 0., 0., 0., 0.],

[0., 0., 0., 0., 1., 0.],

[0., 0., 0., 0., 0., 1.],

[0., 0., 0., 1., 0., 0.],

[0., 0., 0., 1., 0., 0.],

[0., 0., 1., 0., 0., 0.],

[0., 0., 1., 0., 0., 0.]])

3.2 (2) One-hot encoding with pandas

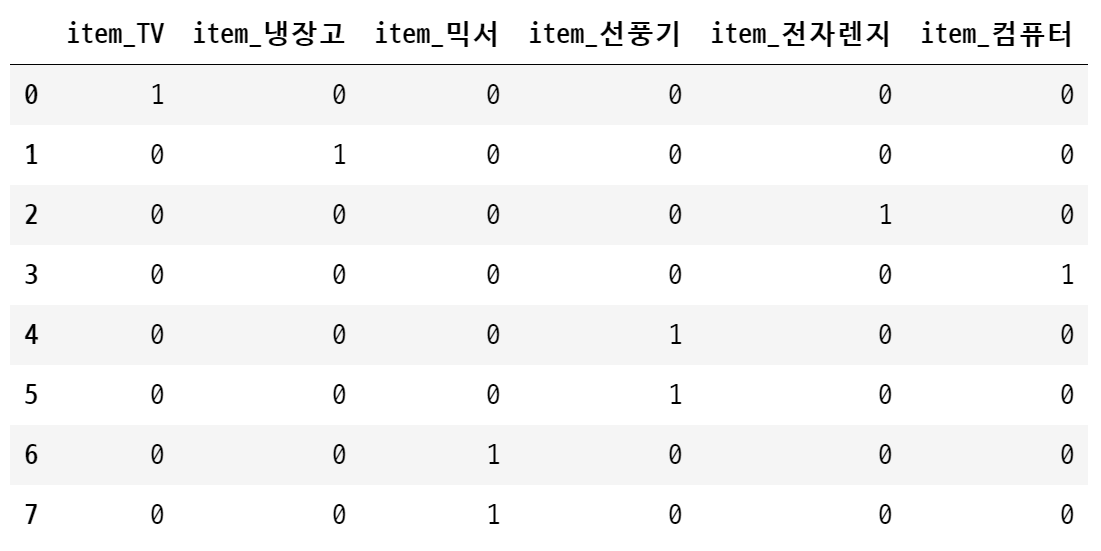

With pandas' get_dummies function, one-hot encoding takes a single call.

import pandas as pd

df = pd.DataFrame({'item':['TV','냉장고','전자렌지','컴퓨터','선풍기','선풍기','믹서','믹서'] })

df

pd.get_dummies(df)
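To make the result of `get_dummies` concrete, the sketch below repeats the call and inspects the generated columns. Passing `dtype=int` is an addition not in the original; it is used here because recent pandas versions return boolean columns by default.

```python
import pandas as pd

df = pd.DataFrame({'item': ['TV', '냉장고', '전자렌지', '컴퓨터',
                            '선풍기', '선풍기', '믹서', '믹서']})

# One indicator column per distinct value, named <column>_<value>
dummies = pd.get_dummies(df, dtype=int)

print(dummies.columns.tolist())
print(dummies)
```

Each row contains exactly one 1, in the column that matches the original item, so no LabelEncoder or reshape step is needed.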

4 Feature Scaling and Normalization

4.1 (1) StandardScaler

from sklearn.datasets import load_iris

import pandas as pd

# Load the iris dataset and convert it to a DataFrame.

iris = load_iris()

iris_data = iris.data

iris_data

>>>

array([[5.1, 3.5, 1.4, 0.2],

[4.9, 3. , 1.4, 0.2],

[4.7, 3.2, 1.3, 0.2],

[4.6, 3.1, 1.5, 0.2],

[5. , 3.6, 1.4, 0.2],

[5.4, 3.9, 1.7, 0.4],

[4.6, 3.4, 1.4, 0.3],

[5. , 3.4, 1.5, 0.2],

[4.4, 2.9, 1.4, 0.2],

[4.9, 3.1, 1.5, 0.1],

[5.4, 3.7, 1.5, 0.2],

[4.8, 3.4, 1.6, 0.2],

[4.8, 3. , 1.4, 0.1],

[4.3, 3. , 1.1, 0.1],

[5.8, 4. , 1.2, 0.2],

[5.7, 4.4, 1.5, 0.4],

[5.4, 3.9, 1.3, 0.4],

[5.1, 3.5, 1.4, 0.3],

[5.7, 3.8, 1.7, 0.3],

[5.1, 3.8, 1.5, 0.3],

[5.4, 3.4, 1.7, 0.2],

[5.1, 3.7, 1.5, 0.4],

[4.6, 3.6, 1. , 0.2],

[5.1, 3.3, 1.7, 0.5],

[4.8, 3.4, 1.9, 0.2],

[5. , 3. , 1.6, 0.2],

[5. , 3.4, 1.6, 0.4],

[5.2, 3.5, 1.5, 0.2],

[5.2, 3.4, 1.4, 0.2],

[4.7, 3.2, 1.6, 0.2],

[4.8, 3.1, 1.6, 0.2],

[5.4, 3.4, 1.5, 0.4],

[5.2, 4.1, 1.5, 0.1],

[5.5, 4.2, 1.4, 0.2],

[4.9, 3.1, 1.5, 0.2],

[5. , 3.2, 1.2, 0.2],

[5.5, 3.5, 1.3, 0.2],

[4.9, 3.6, 1.4, 0.1],

[4.4, 3. , 1.3, 0.2],

[5.1, 3.4, 1.5, 0.2],

[5. , 3.5, 1.3, 0.3],

[4.5, 2.3, 1.3, 0.3],

[4.4, 3.2, 1.3, 0.2],

[5. , 3.5, 1.6, 0.6],

[5.1, 3.8, 1.9, 0.4],

[4.8, 3. , 1.4, 0.3],

[5.1, 3.8, 1.6, 0.2],

[4.6, 3.2, 1.4, 0.2],

[5.3, 3.7, 1.5, 0.2],

[5. , 3.3, 1.4, 0.2],

[7. , 3.2, 4.7, 1.4],

[6.4, 3.2, 4.5, 1.5],

[6.9, 3.1, 4.9, 1.5],

[5.5, 2.3, 4. , 1.3],

[6.5, 2.8, 4.6, 1.5],

[5.7, 2.8, 4.5, 1.3],

[6.3, 3.3, 4.7, 1.6],

[4.9, 2.4, 3.3, 1. ],

[6.6, 2.9, 4.6, 1.3],

[5.2, 2.7, 3.9, 1.4],

[5. , 2. , 3.5, 1. ],

[5.9, 3. , 4.2, 1.5],

[6. , 2.2, 4. , 1. ],

[6.1, 2.9, 4.7, 1.4],

[5.6, 2.9, 3.6, 1.3],

[6.7, 3.1, 4.4, 1.4],

[5.6, 3. , 4.5, 1.5],

[5.8, 2.7, 4.1, 1. ],

[6.2, 2.2, 4.5, 1.5],

[5.6, 2.5, 3.9, 1.1],

[5.9, 3.2, 4.8, 1.8],

[6.1, 2.8, 4. , 1.3],

[6.3, 2.5, 4.9, 1.5],

[6.1, 2.8, 4.7, 1.2],

[6.4, 2.9, 4.3, 1.3],

[6.6, 3. , 4.4, 1.4],

[6.8, 2.8, 4.8, 1.4],

[6.7, 3. , 5. , 1.7],

[6. , 2.9, 4.5, 1.5],

[5.7, 2.6, 3.5, 1. ],

[5.5, 2.4, 3.8, 1.1],

[5.5, 2.4, 3.7, 1. ],

[5.8, 2.7, 3.9, 1.2],

[6. , 2.7, 5.1, 1.6],

[5.4, 3. , 4.5, 1.5],

[6. , 3.4, 4.5, 1.6],

[6.7, 3.1, 4.7, 1.5],

[6.3, 2.3, 4.4, 1.3],

[5.6, 3. , 4.1, 1.3],

[5.5, 2.5, 4. , 1.3],

[5.5, 2.6, 4.4, 1.2],

[6.1, 3. , 4.6, 1.4],

[5.8, 2.6, 4. , 1.2],

[5. , 2.3, 3.3, 1. ],

[5.6, 2.7, 4.2, 1.3],

[5.7, 3. , 4.2, 1.2],

[5.7, 2.9, 4.2, 1.3],

[6.2, 2.9, 4.3, 1.3],

[5.1, 2.5, 3. , 1.1],

[5.7, 2.8, 4.1, 1.3],

[6.3, 3.3, 6. , 2.5],

[5.8, 2.7, 5.1, 1.9],

[7.1, 3. , 5.9, 2.1],

[6.3, 2.9, 5.6, 1.8],

[6.5, 3. , 5.8, 2.2],

[7.6, 3. , 6.6, 2.1],

[4.9, 2.5, 4.5, 1.7],

[7.3, 2.9, 6.3, 1.8],

[6.7, 2.5, 5.8, 1.8],

[7.2, 3.6, 6.1, 2.5],

[6.5, 3.2, 5.1, 2. ],

[6.4, 2.7, 5.3, 1.9],

[6.8, 3. , 5.5, 2.1],

[5.7, 2.5, 5. , 2. ],

[5.8, 2.8, 5.1, 2.4],

[6.4, 3.2, 5.3, 2.3],

[6.5, 3. , 5.5, 1.8],

[7.7, 3.8, 6.7, 2.2],

[7.7, 2.6, 6.9, 2.3],

[6. , 2.2, 5. , 1.5],

[6.9, 3.2, 5.7, 2.3],

[5.6, 2.8, 4.9, 2. ],

[7.7, 2.8, 6.7, 2. ],

[6.3, 2.7, 4.9, 1.8],

[6.7, 3.3, 5.7, 2.1],

[7.2, 3.2, 6. , 1.8],

[6.2, 2.8, 4.8, 1.8],

[6.1, 3. , 4.9, 1.8],

[6.4, 2.8, 5.6, 2.1],

[7.2, 3. , 5.8, 1.6],

[7.4, 2.8, 6.1, 1.9],

[7.9, 3.8, 6.4, 2. ],

[6.4, 2.8, 5.6, 2.2],

[6.3, 2.8, 5.1, 1.5],

[6.1, 2.6, 5.6, 1.4],

[7.7, 3. , 6.1, 2.3],

[6.3, 3.4, 5.6, 2.4],

[6.4, 3.1, 5.5, 1.8],

[6. , 3. , 4.8, 1.8],

[6.9, 3.1, 5.4, 2.1],

[6.7, 3.1, 5.6, 2.4],

[6.9, 3.1, 5.1, 2.3],

[5.8, 2.7, 5.1, 1.9],

[6.8, 3.2, 5.9, 2.3],

[6.7, 3.3, 5.7, 2.5],

[6.7, 3. , 5.2, 2.3],

[6.3, 2.5, 5. , 1.9],

[6.5, 3. , 5.2, 2. ],

[6.2, 3.4, 5.4, 2.3],

[5.9, 3. , 5.1, 1.8]])

iris.feature_names

>>>

['sepal length (cm)',

'sepal width (cm)',

'petal length (cm)',

'petal width (cm)']

iris_df = pd.DataFrame(data=iris_data, columns=iris.feature_names)

print('Feature means')

print(iris_df.mean(), '\n')

print('Feature variances')

print(iris_df.var())

>>>

Feature means

sepal length (cm) 5.843333

sepal width (cm) 3.057333

petal length (cm) 3.758000

petal width (cm) 1.199333

dtype: float64

Feature variances

sepal length (cm) 0.685694

sepal width (cm) 0.189979

petal length (cm) 3.116278

petal width (cm) 0.581006

dtype: float64

from sklearn.preprocessing import StandardScaler

# Create a StandardScaler object

scaler = StandardScaler()

# Transform the dataset with StandardScaler by calling fit() and transform().

scaler.fit(iris_df)

iris_scaled = scaler.transform(iris_df)

iris_scaled

>>>

array([[-9.00681170e-01, 1.01900435e+00, -1.34022653e+00,

-1.31544430e+00],

[-1.14301691e+00, -1.31979479e-01, -1.34022653e+00,

-1.31544430e+00],

[-1.38535265e+00, 3.28414053e-01, -1.39706395e+00,

-1.31544430e+00],

[-1.50652052e+00, 9.82172869e-02, -1.28338910e+00,

-1.31544430e+00],

[-1.02184904e+00, 1.24920112e+00, -1.34022653e+00,

-1.31544430e+00],

[-5.37177559e-01, 1.93979142e+00, -1.16971425e+00,

-1.05217993e+00],

[-1.50652052e+00, 7.88807586e-01, -1.34022653e+00,

-1.18381211e+00],

[-1.02184904e+00, 7.88807586e-01, -1.28338910e+00,

-1.31544430e+00],

[-1.74885626e+00, -3.62176246e-01, -1.34022653e+00,

-1.31544430e+00],

[-1.14301691e+00, 9.82172869e-02, -1.28338910e+00,

-1.44707648e+00],

[-5.37177559e-01, 1.47939788e+00, -1.28338910e+00,

-1.31544430e+00],

[-1.26418478e+00, 7.88807586e-01, -1.22655167e+00,

-1.31544430e+00],

[-1.26418478e+00, -1.31979479e-01, -1.34022653e+00,

-1.44707648e+00],

[-1.87002413e+00, -1.31979479e-01, -1.51073881e+00,

-1.44707648e+00],

[-5.25060772e-02, 2.16998818e+00, -1.45390138e+00,

-1.31544430e+00],

[-1.73673948e-01, 3.09077525e+00, -1.28338910e+00,

-1.05217993e+00],

[-5.37177559e-01, 1.93979142e+00, -1.39706395e+00,

-1.05217993e+00],

[-9.00681170e-01, 1.01900435e+00, -1.34022653e+00,

-1.18381211e+00],

[-1.73673948e-01, 1.70959465e+00, -1.16971425e+00,

-1.18381211e+00],

[-9.00681170e-01, 1.70959465e+00, -1.28338910e+00,

-1.18381211e+00],

[-5.37177559e-01, 7.88807586e-01, -1.16971425e+00,

-1.31544430e+00],

[-9.00681170e-01, 1.47939788e+00, -1.28338910e+00,

-1.05217993e+00],

[-1.50652052e+00, 1.24920112e+00, -1.56757623e+00,

-1.31544430e+00],

[-9.00681170e-01, 5.58610819e-01, -1.16971425e+00,

-9.20547742e-01],

[-1.26418478e+00, 7.88807586e-01, -1.05603939e+00,

-1.31544430e+00],

[-1.02184904e+00, -1.31979479e-01, -1.22655167e+00,

-1.31544430e+00],

[-1.02184904e+00, 7.88807586e-01, -1.22655167e+00,

-1.05217993e+00],

[-7.79513300e-01, 1.01900435e+00, -1.28338910e+00,

-1.31544430e+00],

[-7.79513300e-01, 7.88807586e-01, -1.34022653e+00,

-1.31544430e+00],

[-1.38535265e+00, 3.28414053e-01, -1.22655167e+00,

-1.31544430e+00],

[-1.26418478e+00, 9.82172869e-02, -1.22655167e+00,

-1.31544430e+00],

[-5.37177559e-01, 7.88807586e-01, -1.28338910e+00,

-1.05217993e+00],

[-7.79513300e-01, 2.40018495e+00, -1.28338910e+00,

-1.44707648e+00],

[-4.16009689e-01, 2.63038172e+00, -1.34022653e+00,

-1.31544430e+00],

[-1.14301691e+00, 9.82172869e-02, -1.28338910e+00,

-1.31544430e+00],

[-1.02184904e+00, 3.28414053e-01, -1.45390138e+00,

-1.31544430e+00],

[-4.16009689e-01, 1.01900435e+00, -1.39706395e+00,

-1.31544430e+00],

[-1.14301691e+00, 1.24920112e+00, -1.34022653e+00,

-1.44707648e+00],

[-1.74885626e+00, -1.31979479e-01, -1.39706395e+00,

-1.31544430e+00],

[-9.00681170e-01, 7.88807586e-01, -1.28338910e+00,

-1.31544430e+00],

[-1.02184904e+00, 1.01900435e+00, -1.39706395e+00,

-1.18381211e+00],

[-1.62768839e+00, -1.74335684e+00, -1.39706395e+00,

-1.18381211e+00],

[-1.74885626e+00, 3.28414053e-01, -1.39706395e+00,

-1.31544430e+00],

[-1.02184904e+00, 1.01900435e+00, -1.22655167e+00,

-7.88915558e-01],

[-9.00681170e-01, 1.70959465e+00, -1.05603939e+00,

-1.05217993e+00],

[-1.26418478e+00, -1.31979479e-01, -1.34022653e+00,

-1.18381211e+00],

[-9.00681170e-01, 1.70959465e+00, -1.22655167e+00,

-1.31544430e+00],

[-1.50652052e+00, 3.28414053e-01, -1.34022653e+00,

-1.31544430e+00],

[-6.58345429e-01, 1.47939788e+00, -1.28338910e+00,

-1.31544430e+00],

[-1.02184904e+00, 5.58610819e-01, -1.34022653e+00,

-1.31544430e+00],

[ 1.40150837e+00, 3.28414053e-01, 5.35408562e-01,

2.64141916e-01],

[ 6.74501145e-01, 3.28414053e-01, 4.21733708e-01,

3.95774101e-01],

[ 1.28034050e+00, 9.82172869e-02, 6.49083415e-01,

3.95774101e-01],

[-4.16009689e-01, -1.74335684e+00, 1.37546573e-01,

1.32509732e-01],

[ 7.95669016e-01, -5.92373012e-01, 4.78571135e-01,

3.95774101e-01],

[-1.73673948e-01, -5.92373012e-01, 4.21733708e-01,

1.32509732e-01],

[ 5.53333275e-01, 5.58610819e-01, 5.35408562e-01,

5.27406285e-01],

[-1.14301691e+00, -1.51316008e+00, -2.60315415e-01,

-2.62386821e-01],

[ 9.16836886e-01, -3.62176246e-01, 4.78571135e-01,

1.32509732e-01],

[-7.79513300e-01, -8.22569778e-01, 8.07091462e-02,

2.64141916e-01],

[-1.02184904e+00, -2.43394714e+00, -1.46640561e-01,

-2.62386821e-01],

[ 6.86617933e-02, -1.31979479e-01, 2.51221427e-01,

3.95774101e-01],

[ 1.89829664e-01, -1.97355361e+00, 1.37546573e-01,

-2.62386821e-01],

[ 3.10997534e-01, -3.62176246e-01, 5.35408562e-01,

2.64141916e-01],

[-2.94841818e-01, -3.62176246e-01, -8.98031345e-02,

1.32509732e-01],

[ 1.03800476e+00, 9.82172869e-02, 3.64896281e-01,

2.64141916e-01],

[-2.94841818e-01, -1.31979479e-01, 4.21733708e-01,

3.95774101e-01],

[-5.25060772e-02, -8.22569778e-01, 1.94384000e-01,

-2.62386821e-01],

[ 4.32165405e-01, -1.97355361e+00, 4.21733708e-01,

3.95774101e-01],

[-2.94841818e-01, -1.28296331e+00, 8.07091462e-02,

-1.30754636e-01],

[ 6.86617933e-02, 3.28414053e-01, 5.92245988e-01,

7.90670654e-01],

[ 3.10997534e-01, -5.92373012e-01, 1.37546573e-01,

1.32509732e-01],

[ 5.53333275e-01, -1.28296331e+00, 6.49083415e-01,

3.95774101e-01],

[ 3.10997534e-01, -5.92373012e-01, 5.35408562e-01,

8.77547895e-04],

[ 6.74501145e-01, -3.62176246e-01, 3.08058854e-01,

1.32509732e-01],

[ 9.16836886e-01, -1.31979479e-01, 3.64896281e-01,

2.64141916e-01],

[ 1.15917263e+00, -5.92373012e-01, 5.92245988e-01,

2.64141916e-01],

[ 1.03800476e+00, -1.31979479e-01, 7.05920842e-01,

6.59038469e-01],

[ 1.89829664e-01, -3.62176246e-01, 4.21733708e-01,

3.95774101e-01],

[-1.73673948e-01, -1.05276654e+00, -1.46640561e-01,

-2.62386821e-01],

[-4.16009689e-01, -1.51316008e+00, 2.38717193e-02,

-1.30754636e-01],

[-4.16009689e-01, -1.51316008e+00, -3.29657076e-02,

-2.62386821e-01],

[-5.25060772e-02, -8.22569778e-01, 8.07091462e-02,

8.77547895e-04],

[ 1.89829664e-01, -8.22569778e-01, 7.62758269e-01,

5.27406285e-01],

[-5.37177559e-01, -1.31979479e-01, 4.21733708e-01,

3.95774101e-01],

[ 1.89829664e-01, 7.88807586e-01, 4.21733708e-01,

5.27406285e-01],

[ 1.03800476e+00, 9.82172869e-02, 5.35408562e-01,

3.95774101e-01],

[ 5.53333275e-01, -1.74335684e+00, 3.64896281e-01,

1.32509732e-01],

[-2.94841818e-01, -1.31979479e-01, 1.94384000e-01,

1.32509732e-01],

[-4.16009689e-01, -1.28296331e+00, 1.37546573e-01,

1.32509732e-01],

[-4.16009689e-01, -1.05276654e+00, 3.64896281e-01,

8.77547895e-04],

[ 3.10997534e-01, -1.31979479e-01, 4.78571135e-01,

2.64141916e-01],

[-5.25060772e-02, -1.05276654e+00, 1.37546573e-01,

8.77547895e-04],

[-1.02184904e+00, -1.74335684e+00, -2.60315415e-01,

-2.62386821e-01],

[-2.94841818e-01, -8.22569778e-01, 2.51221427e-01,

1.32509732e-01],

[-1.73673948e-01, -1.31979479e-01, 2.51221427e-01,

8.77547895e-04],

[-1.73673948e-01, -3.62176246e-01, 2.51221427e-01,

1.32509732e-01],

[ 4.32165405e-01, -3.62176246e-01, 3.08058854e-01,

1.32509732e-01],

[-9.00681170e-01, -1.28296331e+00, -4.30827696e-01,

-1.30754636e-01],

[-1.73673948e-01, -5.92373012e-01, 1.94384000e-01,

1.32509732e-01],

[ 5.53333275e-01, 5.58610819e-01, 1.27429511e+00,

1.71209594e+00],

[-5.25060772e-02, -8.22569778e-01, 7.62758269e-01,

9.22302838e-01],

[ 1.52267624e+00, -1.31979479e-01, 1.21745768e+00,

1.18556721e+00],

[ 5.53333275e-01, -3.62176246e-01, 1.04694540e+00,

7.90670654e-01],

[ 7.95669016e-01, -1.31979479e-01, 1.16062026e+00,

1.31719939e+00],

[ 2.12851559e+00, -1.31979479e-01, 1.61531967e+00,

1.18556721e+00],

[-1.14301691e+00, -1.28296331e+00, 4.21733708e-01,

6.59038469e-01],

[ 1.76501198e+00, -3.62176246e-01, 1.44480739e+00,

7.90670654e-01],

[ 1.03800476e+00, -1.28296331e+00, 1.16062026e+00,

7.90670654e-01],

[ 1.64384411e+00, 1.24920112e+00, 1.33113254e+00,

1.71209594e+00],

[ 7.95669016e-01, 3.28414053e-01, 7.62758269e-01,

1.05393502e+00],

[ 6.74501145e-01, -8.22569778e-01, 8.76433123e-01,

9.22302838e-01],

[ 1.15917263e+00, -1.31979479e-01, 9.90107977e-01,

1.18556721e+00],

[-1.73673948e-01, -1.28296331e+00, 7.05920842e-01,

1.05393502e+00],

[-5.25060772e-02, -5.92373012e-01, 7.62758269e-01,

1.58046376e+00],

[ 6.74501145e-01, 3.28414053e-01, 8.76433123e-01,

1.44883158e+00],

[ 7.95669016e-01, -1.31979479e-01, 9.90107977e-01,

7.90670654e-01],

[ 2.24968346e+00, 1.70959465e+00, 1.67215710e+00,

1.31719939e+00],

[ 2.24968346e+00, -1.05276654e+00, 1.78583195e+00,

1.44883158e+00],

[ 1.89829664e-01, -1.97355361e+00, 7.05920842e-01,

3.95774101e-01],

[ 1.28034050e+00, 3.28414053e-01, 1.10378283e+00,

1.44883158e+00],

[-2.94841818e-01, -5.92373012e-01, 6.49083415e-01,

1.05393502e+00],

[ 2.24968346e+00, -5.92373012e-01, 1.67215710e+00,

1.05393502e+00],

[ 5.53333275e-01, -8.22569778e-01, 6.49083415e-01,

7.90670654e-01],

[ 1.03800476e+00, 5.58610819e-01, 1.10378283e+00,

1.18556721e+00],

[ 1.64384411e+00, 3.28414053e-01, 1.27429511e+00,

7.90670654e-01],

[ 4.32165405e-01, -5.92373012e-01, 5.92245988e-01,

7.90670654e-01],

[ 3.10997534e-01, -1.31979479e-01, 6.49083415e-01,

7.90670654e-01],

[ 6.74501145e-01, -5.92373012e-01, 1.04694540e+00,

1.18556721e+00],

[ 1.64384411e+00, -1.31979479e-01, 1.16062026e+00,

5.27406285e-01],

[ 1.88617985e+00, -5.92373012e-01, 1.33113254e+00,

9.22302838e-01],

[ 2.49201920e+00, 1.70959465e+00, 1.50164482e+00,

1.05393502e+00],

[ 6.74501145e-01, -5.92373012e-01, 1.04694540e+00,

1.31719939e+00],

[ 5.53333275e-01, -5.92373012e-01, 7.62758269e-01,

3.95774101e-01],

[ 3.10997534e-01, -1.05276654e+00, 1.04694540e+00,

2.64141916e-01],

[ 2.24968346e+00, -1.31979479e-01, 1.33113254e+00,

1.44883158e+00],

[ 5.53333275e-01, 7.88807586e-01, 1.04694540e+00,

1.58046376e+00],

[ 6.74501145e-01, 9.82172869e-02, 9.90107977e-01,

7.90670654e-01],

[ 1.89829664e-01, -1.31979479e-01, 5.92245988e-01,

7.90670654e-01],

[ 1.28034050e+00, 9.82172869e-02, 9.33270550e-01,

1.18556721e+00],

[ 1.03800476e+00, 9.82172869e-02, 1.04694540e+00,

1.58046376e+00],

[ 1.28034050e+00, 9.82172869e-02, 7.62758269e-01,

1.44883158e+00],

[-5.25060772e-02, -8.22569778e-01, 7.62758269e-01,

9.22302838e-01],

[ 1.15917263e+00, 3.28414053e-01, 1.21745768e+00,

1.44883158e+00],

[ 1.03800476e+00, 5.58610819e-01, 1.10378283e+00,

1.71209594e+00],

[ 1.03800476e+00, -1.31979479e-01, 8.19595696e-01,

1.44883158e+00],

[ 5.53333275e-01, -1.28296331e+00, 7.05920842e-01,

9.22302838e-01],

[ 7.95669016e-01, -1.31979479e-01, 8.19595696e-01,

1.05393502e+00],

[ 4.32165405e-01, 7.88807586e-01, 9.33270550e-01,

1.44883158e+00],

[ 6.86617933e-02, -1.31979479e-01, 7.62758269e-01,

7.90670654e-01]])

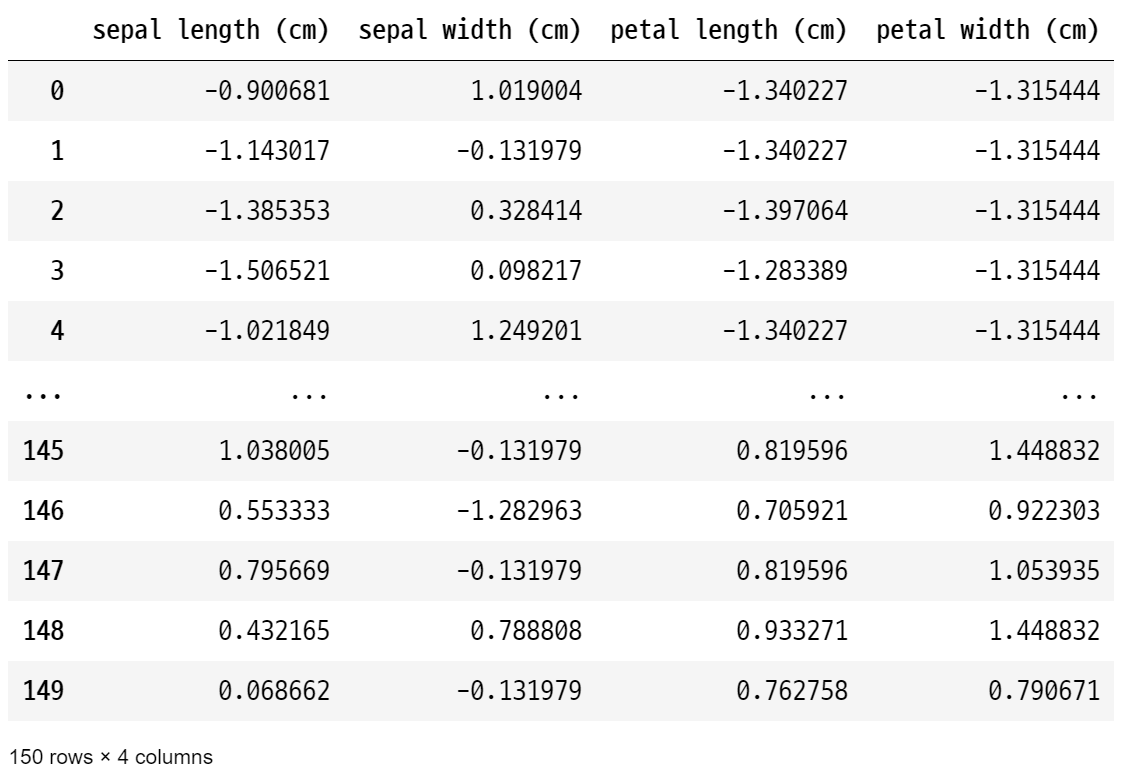

# transform() returns the scaled data as a NumPy ndarray, so convert it to a DataFrame

iris_df_scaled = pd.DataFrame(data=iris_scaled, columns=iris.feature_names)

iris_df_scaled

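print('Feature means')

print(iris_df_scaled.mean(), '\n')

print('Feature variances')

print(iris_df_scaled.var())

>>>

Feature means

sepal length (cm) -1.690315e-15

sepal width (cm) -1.842970e-15

petal length (cm) -1.698641e-15

petal width (cm) -1.409243e-15

dtype: float64

Feature variances

sepal length (cm) 1.006711

sepal width (cm) 1.006711

petal length (cm) 1.006711

petal width (cm) 1.006711

dtype: float64

-> Every column's mean is now extremely close to 0 and its variance close to 1. The variance prints as 1.006711 rather than exactly 1 because pandas' var() computes the sample variance (dividing by n-1), while StandardScaler scales by the population standard deviation (dividing by n); with 150 rows the ratio is 150/149 ≈ 1.006711.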
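The result above can be checked by hand. The sketch below assumes the usual standardization formula z = (x - mean) / std with the population standard deviation, and compares it against `StandardScaler`; the variable names are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler

X = load_iris().data
n = X.shape[0]

# Manual standardization using the population std (ddof=0)
manual = (X - X.mean(axis=0)) / X.std(axis=0, ddof=0)
scaled = StandardScaler().fit_transform(X)
print(np.allclose(manual, scaled))

# Population variance (ddof=0) of the scaled data is 1
var_population = scaled.var(axis=0, ddof=0)
# Sample variance (ddof=1, the pandas default) is n / (n - 1)
var_sample = scaled.var(axis=0, ddof=1)
print(var_population)
print(var_sample, n / (n - 1))
```

This is why a variance slightly above 1 in the pandas output does not indicate a scaling problem.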

4.2 (2) MinMaxScaler

from sklearn.preprocessing import MinMaxScaler

# Create a MinMaxScaler object

scaler = MinMaxScaler()

# Transform the dataset with MinMaxScaler by calling fit() and transform().

scaler.fit(iris_df)

iris_scaled = scaler.transform(iris_df)

# transform() returns the scaled data as a NumPy ndarray, so convert it to a DataFrame

iris_df_scaled = pd.DataFrame(data=iris_scaled, columns=iris.feature_names)

print('Feature minimums')

print(iris_df_scaled.min(), '\n')

print('Feature maximums')

print(iris_df_scaled.max())

>>>

Feature minimums

sepal length (cm) 0.0

sepal width (cm) 0.0

petal length (cm) 0.0

petal width (cm) 0.0

dtype: float64

Feature maximums

sepal length (cm) 1.0

sepal width (cm) 1.0

petal length (cm) 1.0

petal width (cm) 1.0

dtype: float64

Every feature has been rescaled to the range 0 to 1.
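For reference, min-max scaling with the default range can be reproduced by hand as (x - min) / (max - min) per column. A minimal sketch comparing that formula with `MinMaxScaler` (variable names are illustrative):

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import MinMaxScaler

X = load_iris().data

# Manual min-max scaling, computed column by column
col_min = X.min(axis=0)
col_max = X.max(axis=0)
manual = (X - col_min) / (col_max - col_min)

scaled = MinMaxScaler().fit_transform(X)
print(np.allclose(manual, scaled))
print(scaled.min(axis=0), scaled.max(axis=0))
```

Because the minimum and maximum come from the fitted data, values outside that range in new data would map outside 0 to 1.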

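-> Training a model on standardized or normalized data can give different results than training on the raw data.

Compare the performance of each option and choose the one that works best!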
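One way to run that comparison is sketched below. The choice of `KNeighborsClassifier` and 5-fold cross-validation is an assumption made for illustration; the original post does not name a model or an evaluation scheme. Wrapping each scaler in a pipeline means it is fit only on the training folds.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler

X, y = load_iris(return_X_y=True)

# Same classifier with three preprocessing options (hypothetical setup)
candidates = {
    'raw': make_pipeline(KNeighborsClassifier()),
    'standard': make_pipeline(StandardScaler(), KNeighborsClassifier()),
    'minmax': make_pipeline(MinMaxScaler(), KNeighborsClassifier()),
}

scores = {}
for name, model in candidates.items():
    # Mean accuracy across 5 cross-validation folds
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f'{name}: {scores[name]:.4f}')

best = max(scores, key=scores.get)
print('best option:', best)
```

On a small, well-scaled dataset such as iris the differences may be minor; the gap tends to matter more when features have very different units or ranges.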
